Workshop: Machine learning for sound makers – RAVE


BEK invites participants to a new online workshop on machine learning for sound makers, part of our collaboration series with Notam: “Workshops for Advanced Users”.

Workshop dates: 20–24 June

Registration deadline: 31 May

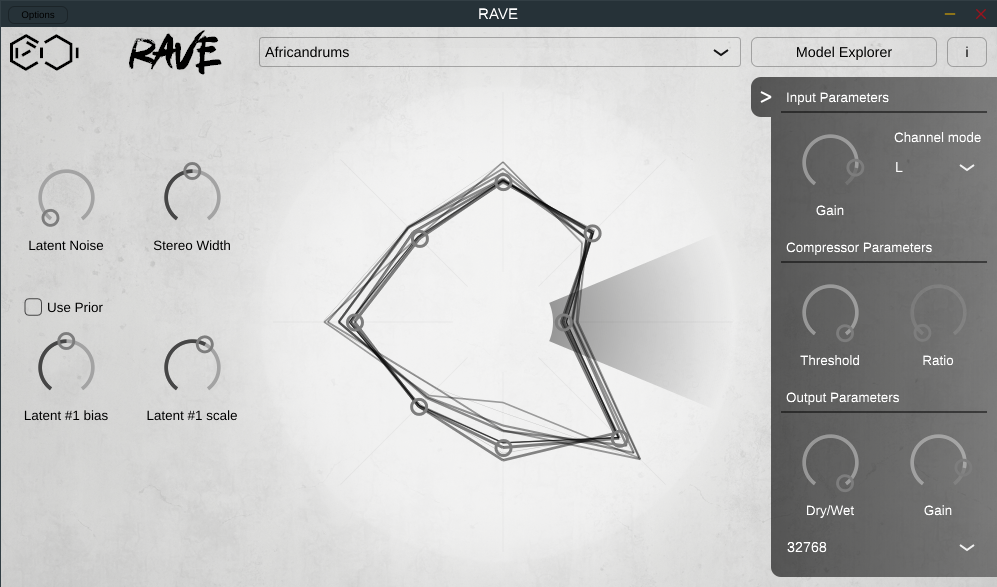

This workshop will focus on RAVE (Realtime Audio Variational autoEncoder), a recently developed machine learning model and piece of software. It will be led by RAVE's main developer, Antoine Caillon, a member of the ACIDS group (“Artificial Creative Intelligence and Data Science”) at Ircam in Paris.

RAVE offers a novel way to bring machine learning into a creative audio workflow. It is available both as a Max/MSP object and as a VST plugin, so that a model previously trained on a dataset of sounds can be manipulated in real time. The first day of the workshop will focus on using these objects and plugins in the participants' own creative workflows. The second day will introduce how to build your own dataset and set up training. Because training is very time- and hardware-intensive, participants will continue this process on their own after the workshop. For the rest of the week, participants will work on their own projects, with daily meetups and Q&A sessions.

The use of deep-learning-based algorithms for artistic purposes has grown rapidly in recent years, with many examples in image generation, sound design, style transfer, and autonomous creation. This workshop discusses these algorithms in the context of neural sound synthesis and real-time interaction, moving from a conceptual overview to a practical use case. RAVE will then be presented as a way to make state-of-the-art neural synthesis compatible with real-world constraints on synthesis speed and quality.

More on RAVE on GitHub

Article on RAVE on arXiv

Basic knowledge of Max/MSP and programming is required. To register, send an email to espene@bek.no with the subject “RAVE”, including a short bio and a brief description of your interest in the subject and any previous experience with it. The workshop is free to attend, but because places are limited there is a €50 cancellation fee. If you want to train your own model, you will need either your own hardware or a cloud computing service (datacrunch.io, Google Colab Pro, etc.), which may involve extra costs not covered by BEK. The workshop will be held in English.

About the workshop series: Workshops for Advanced Users

Antoine Caillon

Antoine Caillon holds a master’s degree in Acoustics, Signal Processing and Computer Science applied to Music (ATIAM, 2019) and is currently a PhD student in the Ircam-STMS Musical Representations team. He studies hierarchical temporal learning for multi-instrument neural audio synthesis and develops models and applications for artistic use. In this context, he collaborates with composers in residence at Ircam to create new interactions and experiences based on deep learning.